A while ago, Mehta and Schwab drew a connection between the Restricted Boltzmann Machine (RBM), a type of deep learning algorithm, and the renormalization group (RG), a theoretical tool in physics applied to critical phenomena. [Mehta & Schwab, 2014; see previous entry] Can RG be related to other deep learning algorithms?

Schwab, together with Stoudenmire, wrote a paper on a new machine learning algorithm that directly exploits a type of RG in physics: the density matrix renormalization group (DMRG). DMRG is used in condensed matter physics for low-dimensional (d=1 or 2) lattice systems. It was invented by Steve White, originally using diagonalization of reduced density matrices on each site. [White 1992] Nowadays, however, it is usually performed using a singular value decomposition for each successive pair of lattice sites.
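The relation between the two routes (diagonalizing a reduced density matrix versus taking a singular value decomposition) can be checked numerically. The following is a minimal NumPy sketch, not White's implementation: the random amplitude matrix `psi`, the block dimensions and the number of kept states `m` are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical bipartite state: psi[i, j] is the amplitude for left-block
# state i and right-block state j (sizes are illustrative assumptions).
dim_left, dim_right = 8, 8
psi = rng.normal(size=(dim_left, dim_right))
psi /= np.linalg.norm(psi)  # normalize the state

# Route 1: diagonalize the reduced density matrix of the left block.
rho_left = psi @ psi.conj().T
evals = np.linalg.eigvalsh(rho_left)[::-1]  # sorted in descending order

# Route 2: singular value decomposition of the amplitude matrix.
u, s, vh = np.linalg.svd(psi, full_matrices=False)

# The squared singular values are the eigenvalues of rho_left.
same_spectrum = np.allclose(evals, s**2)

# Keep only the m largest singular values (m is an illustrative choice).
m = 4
psi_trunc = (u[:, :m] * s[:m]) @ vh[:m, :]

# The squared error of the truncated state equals the discarded weight.
discarded_weight = float(np.sum(s[m:] ** 2))
truncation_error = float(np.linalg.norm(psi - psi_trunc) ** 2)

print(same_spectrum, discarded_weight, truncation_error)
```

Both routes select the same states to keep; the SVD simply avoids forming the reduced density matrix explicitly.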

DMRG is closely related to quantum entanglement. In the simplest case, a two-site quantum system, the entanglement can be characterized by either of its reduced density matrices. DMRG, however, deals with the reduced density matrices of all sites. Traditionally, this kind of many-body system is represented by kets:

.

.
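For reference, the standard way of writing such a ket in Schollwöck's review, assumed here to be the form intended, is given below for a chain of $L$ sites with local basis states $|\sigma_i\rangle$ (the symbols $L$ and $\sigma_i$ are notation introduced for this sketch):

$$
|\psi\rangle = \sum_{\sigma_1, \ldots, \sigma_L} c_{\sigma_1 \ldots \sigma_L} \, |\sigma_1 \ldots \sigma_L\rangle
$$

With $d$ local states per site there are $d^L$ coefficients, which is why a more compact description is needed.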

These c's are c-numbers. To describe the entanglement of these states while remaining numerically convenient, it is desirable to convert these c-numbers into matrices: [Schollwöck 2013]

.

.
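In the same assumed notation, the matrix product form replaces each coefficient by a product of site-dependent matrices $A^{\sigma_i}$. The open-boundary and periodic-boundary versions, as they usually appear in the literature, read:

$$
c_{\sigma_1 \ldots \sigma_L} = A^{\sigma_1} A^{\sigma_2} \cdots A^{\sigma_L},
\qquad
c_{\sigma_1 \ldots \sigma_L} = \mathrm{Tr}\left( A^{\sigma_1} A^{\sigma_2} \cdots A^{\sigma_L} \right)
$$

For open boundaries the first and last matrices are a row vector and a column vector, so the product is a single number.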

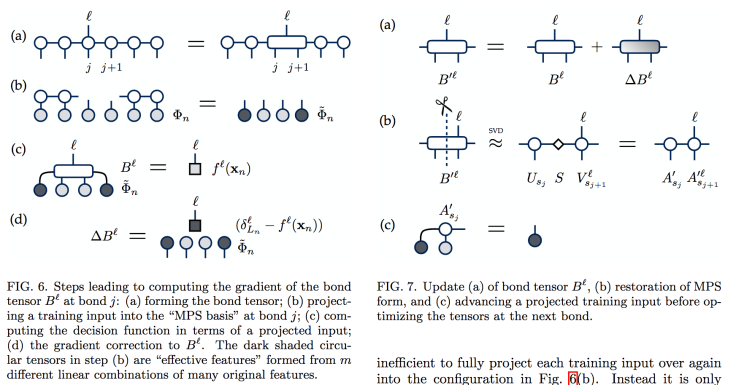

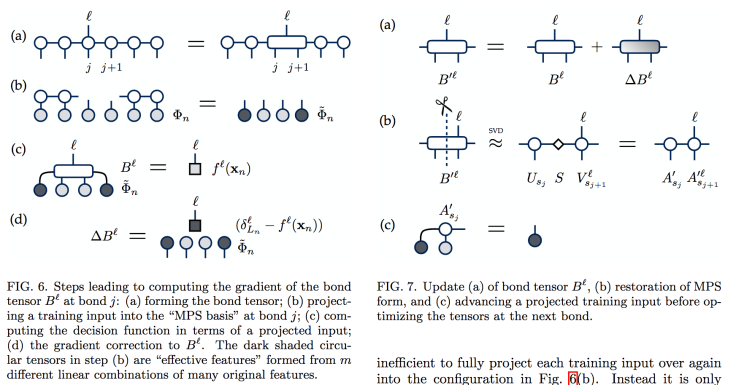

These are tensor networks. DMRG aims at finding a good description of the states in terms of them. Tensor networks have a nice graphical representation, as in the appendix of the paper by Stoudenmire and Schwab, and the training is also described elegantly in their paper using tensor network diagrams. Their algorithm appears to be a good new machine learning algorithm, probably best suited to small datasets with complicated features. It is a direct application of real-space RG to machine learning. Stoudenmire wrote on Quora about the value of this work:

“In our work… we reached state-of-the-art accuracy for the MNIST dataset without needing extra techniques such as convolutional layers. One exciting aspect of these proposals is that their cost scales at most linearly in the number of training examples, versus quadratically for most kernel methods. Representing parameters by a tensor network gives them a structure that can be analyzed to better understand the model and what it has learned. Also tensor network optimization methods are adaptive, automatically selecting the minimum number of parameters necessary for the optimal solution within a certain tensor network class.” – Miles Stoudenmire, in Quora
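The idea of splitting a coefficient tensor into a chain of matrices, with the number of parameters chosen adaptively, can be illustrated with successive singular value decompositions. This is a minimal NumPy sketch of the textbook construction, not the training procedure of Stoudenmire and Schwab; the function names `to_mps` and `from_mps`, the `cutoff` value and the two four-site test states are assumptions made for illustration.

```python
import numpy as np

def to_mps(coeffs, cutoff=1e-12):
    """Split a rank-L coefficient tensor into site tensors of shape
    (left_bond, physical, right_bond) using successive SVDs."""
    dims = coeffs.shape
    tensors = []
    bond = 1
    rest = coeffs.reshape(1, -1)
    for d in dims[:-1]:
        rest = rest.reshape(bond * d, -1)
        u, s, vh = np.linalg.svd(rest, full_matrices=False)
        # Adaptive bond dimension: keep only non-negligible singular values.
        keep = max(1, int(np.sum(s > cutoff)))
        tensors.append(u[:, :keep].reshape(bond, d, keep))
        rest = s[:keep, None] * vh[:keep, :]
        bond = keep
    tensors.append(rest.reshape(bond, dims[-1], 1))
    return tensors

def from_mps(tensors):
    """Contract the chain back into the full coefficient tensor."""
    result = tensors[0]
    for a in tensors[1:]:
        result = np.tensordot(result, a, axes=([result.ndim - 1], [0]))
    return result.reshape([a.shape[1] for a in tensors])

# Unentangled product state on four two-level sites.
v = np.array([0.6, 0.8])
product = np.einsum("a,b,c,d->abcd", v, v, v, v)

# Entangled GHZ-like state: (|0000> + |1111>) / sqrt(2).
ghz = np.zeros((2, 2, 2, 2))
ghz[0, 0, 0, 0] = ghz[1, 1, 1, 1] = 1 / np.sqrt(2)

product_mps = to_mps(product)
ghz_mps = to_mps(ghz)

# Bond dimensions between neighbouring sites.
product_bonds = [a.shape[2] for a in product_mps[:-1]]
ghz_bonds = [a.shape[2] for a in ghz_mps[:-1]]
print(product_bonds, ghz_bonds)
```

The unentangled state needs only bond dimension 1, while the entangled one needs 2 on every bond: the matrix sizes grow only as far as the entanglement requires.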

DMRG has also been extended by other algorithms, such as the multiscale entanglement renormalization ansatz (MERA), developed by Vidal and his colleagues. [Vidal 2008]

Steve R. White (adapted from his faculty homepage)

Tensor diagram of the training of this new algorithm. (Taken from arXiv:1605.05775)
