Topology has been shown to reveal important information about geometry and shape from data, [Carlsson 2015][Carlsson 2009] as I have discussed in various TDA blog entries. I have also demonstrated how to describe the topology of discrete data points by constructing simplicial complexes and then calculating the homology and Betti numbers. (I will talk about persistent homology in the future.) Because the data in our digital storage devices are discrete points, homology is the best way to describe their topology.

But if you come from a physics background, you may be familiar with the concepts of homotopy and the fundamental group. Some physicists deal with topology not by digging into advanced mathematical tools but simply through solitons. There is a well-written introduction in this blog. In the physical world, an object is said to be topological if:

- there is a singular point that cannot be removed by a continuous deformation of the field; [Mermin 1979]

- the saddle-point equation of its model differs from that of an object with a different topology, [Rajaraman 1987] inducing different kinds of physical dynamics; [Bray 1994]

- it can only be removed by crossing an energy barrier, which can be described by an instanton; [Calzetta, Ho, Hu 2010]

- it can proliferate through the Berezinskii-Kosterlitz-Thouless (BKT) phase transition; [Kosterlitz, Thouless 1973]

- it can form in a system through a second-order phase transition at a finite rate, a process known as the Kibble-Zurek mechanism (KZM); [Kibble 1976] [Zurek 1985] and

- its topology can be described by a winding number (cf. Betti numbers in homology).
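To make the last point concrete, here is a minimal sketch of how a winding number could be estimated numerically for a planar vector field. It walks once around a circle, accumulates the change in the field's angle, and divides by 2π. The function name `winding_number`, its parameters, and the three example fields are all my own illustrative choices, not taken from any of the cited references, and the sketch assumes the field does not vanish on the sampling circle.

```python
import math

def winding_number(field, center=(0.0, 0.0), radius=1.0, n_samples=200):
    """Estimate how many times a 2D vector field rotates along a circle.

    `field` is a hypothetical callable (x, y) -> (vx, vy); it must be
    nonzero everywhere on the sampling circle.
    """
    cx, cy = center
    total = 0.0
    prev_angle = None
    for k in range(n_samples + 1):
        t = 2.0 * math.pi * k / n_samples
        x = cx + radius * math.cos(t)
        y = cy + radius * math.sin(t)
        vx, vy = field(x, y)
        angle = math.atan2(vy, vx)
        if prev_angle is not None:
            # Wrap the angle increment into [-pi, pi) so branch cuts of atan2
            # do not produce spurious jumps of 2*pi.
            delta = (angle - prev_angle + math.pi) % (2.0 * math.pi) - math.pi
            total += delta
        prev_angle = angle
    return round(total / (2.0 * math.pi))

def vortex(x, y):
    # Field circulating counterclockwise around the origin.
    return (-y, x)

def antivortex(x, y):
    # Field whose direction rotates opposite to the traversal direction.
    return (x, -y)

def uniform(x, y):
    # Constant field: no singular point inside the loop.
    return (1.0, 0.0)

print(winding_number(vortex))      # 1
print(winding_number(antivortex))  # -1
print(winding_number(uniform))     # 0
```

The integer result does not change under smooth deformations of the field that keep it nonzero on the loop, which is the sense in which the singular point "cannot be removed".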

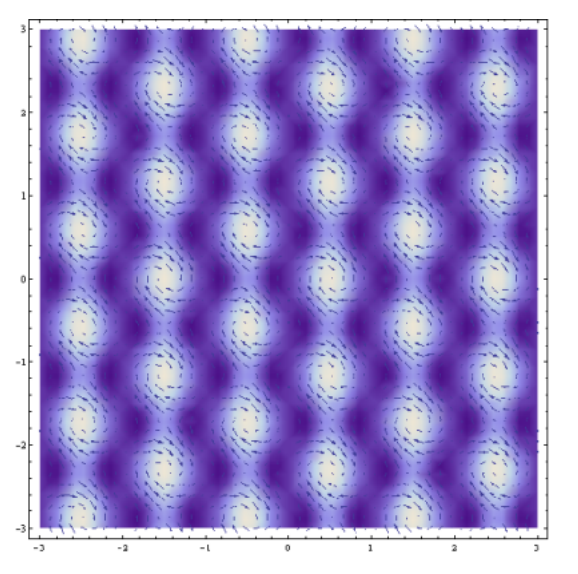

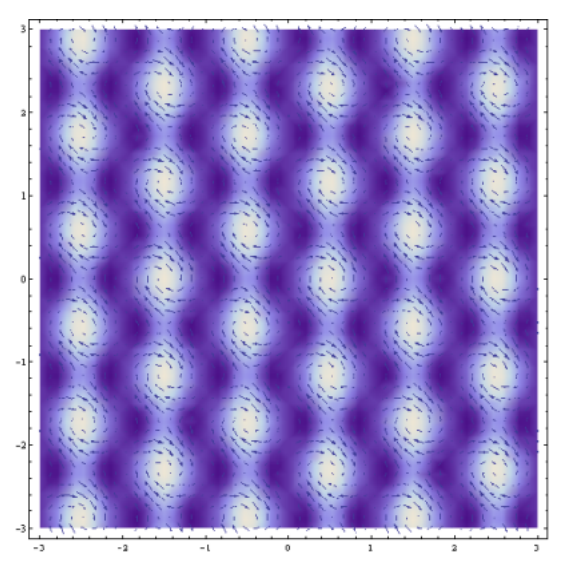

Topological objects include vortices in magnets, superfluids, and superconductors, and Skyrmions in helimagnets. [Mühlbauer et al. 2009] [Ho et al. 2010] They may come in honeycomb order, like Abrikosov vortices in type-II superconductors, [Abrikosov 1957] and helical nanofilaments in smectics. [Matsumoto et al. 2009] Topology is also widely used to describe the fractional quantum Hall effect [Tsui et al. 1982] and topological insulators (a lot of references can be found…). These can all be described using homotopy and winding numbers. We can see that topology is useful for describing the complexities and patterns of the physical world. There are ideas in string-net theory that use topology to describe the emergence of patterns and new phases of quantum matter. [Zeng et al. 2015] Of course, I must not omit topological quantum computing, which makes qubits immune to environmental noise. [Das Sarma, Freedman, Nayak 2005]

However, in data analytics we do not use homotopy, despite its beauty and usefulness in the physical world. Here are some of the reasons:

- With homotopy, it sometimes takes many people decades to figure out which homotopy groups to use. But in data analysis, we want to grasp the topology simply from the data.

- Homotopy deals with continuous mappings, but data are discrete. Simplicial homology captures the topology of discrete data more easily.

- In a physical system, we usually deal with one type of homotopy group. But in data, we often deal with various topologies that we are not aware of in advance. Betti numbers can describe the topology easily, just by looking at the data.

- Of course, homotopy is difficult to compute numerically.
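To illustrate why homology is comparatively easy to compute, here is a minimal sketch that obtains Betti numbers of a small simplicial complex from nothing more than the ranks of its boundary matrices. As a simplifying assumption it works with coefficients in GF(2), so it agrees with the usual Betti numbers only when the complex has no torsion, as in the examples shown. The helper names `rank_gf2` and `betti_numbers` are my own, not from any library or from the references cited here.

```python
from itertools import combinations

def rank_gf2(rows):
    """Rank over GF(2) of a matrix whose rows are integer bitmasks."""
    basis = {}  # leading bit position -> reduced row with that leading bit
    rank = 0
    for row in rows:
        while row:
            lead = row.bit_length() - 1
            if lead not in basis:
                basis[lead] = row
                rank += 1
                break
            row ^= basis[lead]  # eliminate the leading bit and keep reducing
    return rank

def betti_numbers(top_simplices):
    """Betti numbers (over GF(2)) of the complex generated by `top_simplices`."""
    # Close the complex under taking faces.
    simplices = set()
    for s in top_simplices:
        s = tuple(sorted(s))
        for size in range(1, len(s) + 1):
            simplices.update(combinations(s, size))
    max_dim = max(len(s) for s in simplices) - 1
    by_dim = [sorted(s for s in simplices if len(s) == d + 1)
              for d in range(max_dim + 1)]

    # ranks[k] is the rank of the boundary map from k-chains to (k-1)-chains;
    # ranks[0] and ranks[max_dim + 1] stay 0.
    ranks = [0] * (max_dim + 2)
    for k in range(1, max_dim + 1):
        index = {face: i for i, face in enumerate(by_dim[k - 1])}
        rows = []
        for s in by_dim[k]:
            mask = 0
            for face in combinations(s, k):  # the faces of s with k vertices
                mask |= 1 << index[face]
            rows.append(mask)
        ranks[k] = rank_gf2(rows)

    # b_k = dim C_k - rank(d_k) - rank(d_{k+1})
    return [len(by_dim[k]) - ranks[k] - ranks[k + 1]
            for k in range(max_dim + 1)]

hollow_triangle = [(0, 1), (1, 2), (0, 2)]             # a discretized circle
filled_triangle = [(0, 1, 2)]                          # a disk
hollow_tetrahedron = list(combinations(range(4), 3))   # a discretized sphere

print(betti_numbers(hollow_triangle))     # [1, 1]
print(betti_numbers(filled_triangle))     # [1, 0, 0]
print(betti_numbers(hollow_tetrahedron))  # [1, 0, 1]
```

Everything here is finite linear algebra on the list of simplices, with no continuous maps to classify, which is the practical advantage the points above describe.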

Afra Zomorodian also argued for the use of homology over homotopy in his book. [Zomorodian 2009]